Previously, we held a talk on predatory publishing in which we shared techniques, such as the Think. Check. Submit. checklist, for avoiding predatory publishers. Besides predatory publishing, the use of citation metrics is another important issue for researchers to consider.

As a researcher, you will likely use citation metrics to measure the impact of your published research at some point in your academic career. Citation metrics are useful in many scenarios, from supporting promotion and tenure cases and applications for grants and awards, to judging the calibre of a potential research collaborator. Although important, citation metrics are also commonly misused and misapplied, leading to inaccurate conclusions about the true impact of research.

Our Research Impact Measurement librarians have compiled a list of three potential pitfalls to avoid when using citation metrics.

#1 Comparing Your H-index with the Wrong Researcher

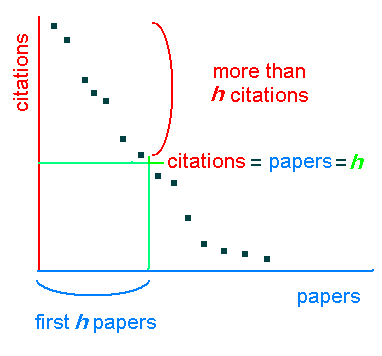

The h-index measures both the productivity and the impact of a researcher, based on the number of publications and the citations they have received. A researcher has an h-index of h if h is the largest number such that h of their publications have each been cited at least h times. Here is a graphical representation of how the h-index is calculated:

How is h-index calculated? Diagram from https://en.wikipedia.org/wiki/H-index
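The definition above can be turned into a few lines of code. The sketch below is only an illustration, not how any particular database computes the figure: the function name `h_index` and the citation counts are hypothetical. It ranks papers from most to least cited and keeps going while the paper at rank r still has at least r citations.

```python
def h_index(citations: list[int]) -> int:
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    # The paper at rank r (counting from 1) must have at least r citations.
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


# Hypothetical citation counts for one researcher's six papers.
papers = [10, 8, 5, 4, 3, 0]
print(h_index(papers))  # → 4
```

Here four papers have at least four citations each, but there are not five papers with at least five citations, so the h-index is 4. Note that the highly cited first paper (10 citations) adds no more to the h-index than a paper with 4 citations would.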

First, you should only compare your h-index with that of someone at a similar stage of their academic career. Early-career researchers tend to have a lower h-index because they have published relatively few papers, and those papers are still accumulating citations. Senior researchers have more publications, many of which have already gathered a substantial number of citations, so they naturally tend to have a higher h-index. Comparing the h-index of an early-career researcher with that of a senior researcher would therefore be unfair.

Second, you should only compare your h-index with that of another researcher in a similar research field. Research fields differ in their publishing behaviours and citation rates, and these differences inevitably affect a researcher's h-index. For example, a humanities researcher should not compare their h-index with that of a researcher in medicine, who would generally publish more articles, and whose articles would also gather citations more quickly.

#2 Using the Wrong Metric to Measure Your Research Impact

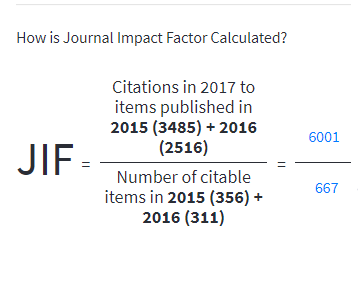

How is Impact Factor calculated? Image from https://clarivate.libguides.com/jcr

As there are many different metrics that can be used to measure the impact of a publication, it is important that researchers understand which metrics to use, what each one means, and how it is calculated. For example, one common mistake is to measure the impact of an article using a journal metric such as Impact Factor (from Clarivate Analytics) or CiteScore (from Elsevier). Such metrics measure the impact of a journal as a whole: they are essentially the average number of citations received by the journal's publications over a specific time period. It is incorrect to assume that an individual article's citations follow its journal's metric, since a journal's average can be driven up by a small number of highly cited papers. Publishing in a high Impact Factor journal does not necessarily lead to high citations. As such, you should not evaluate an article's citation impact based on the journal's Impact Factor or CiteScore. This is just one example of using the wrong metric to measure research impact.
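To see why a journal average says little about a single article, here is a minimal sketch of a two-year, Impact Factor-style calculation. It is simplified: it assumes every item counted is a "citable item", and the journal and its citation counts are hypothetical. The function name `two_year_impact_factor` is also made up for illustration; it is not a Clarivate tool.

```python
from statistics import median


def two_year_impact_factor(citations_this_year: int, citable_items_prev_two_years: int) -> float:
    """Simplified Impact Factor for year Y: citations received in Y by items
    published in Y-1 and Y-2, divided by the citable items published in Y-1 and Y-2."""
    if citable_items_prev_two_years == 0:
        raise ValueError("the journal must have at least one citable item")
    return citations_this_year / citable_items_prev_two_years


# Hypothetical journal: 10 items published in Y-1 and Y-2, with the citations
# each received in year Y. One outlier paper attracts most of the citations.
per_article = [120, 6, 4, 3, 2, 2, 1, 1, 1, 0]

jif = two_year_impact_factor(sum(per_article), len(per_article))
typical = median(per_article)
above = sum(1 for c in per_article if c >= jif)

print(jif)      # 14.0
print(typical)  # 2.0
print(above)    # 1
```

In this made-up example the journal-level figure is 14.0, yet the typical (median) article received only 2 citations and just one of the ten articles reached the average. Judging any one of these articles by the journal's figure would badly misstate its impact.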

#3 Using Just One Metric or One Database to Show the Value of Your Research

Generally, it is advisable to show the impact of your research using several metrics to provide different perspectives. This is especially relevant when you are using metrics for important milestones such as performance reviews, promotions or benchmarking. A variety of article-, author- and journal-level metrics can be used to quantify research impact. For example, instead of only looking at citation counts for an article's impact, you could also consider its field-weighted citation impact, or altmetrics such as social media mentions or policy citations.
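Field-weighted citation impact compares an article's citations with the citations typically received by similar publications. Below is a rough sketch of the idea, assuming "similar" means publications in the same field, of the same document type and from the same publication year. Databases such as Scopus and SciVal apply their own definitions of these groupings and citation windows, so treat this as an illustration only. The function name and all the citation counts are hypothetical.

```python
def field_weighted_citation_impact(article_citations: int, similar_pub_citations: list[int]) -> float:
    """Ratio of an article's citations to the average citations of comparable
    publications (assumed: same field, document type and publication year).
    A value of 1.0 means the article is cited exactly as often as expected."""
    if not similar_pub_citations:
        raise ValueError("need at least one comparable publication")
    expected = sum(similar_pub_citations) / len(similar_pub_citations)
    if expected == 0:
        raise ValueError("comparable publications have no citations")
    return article_citations / expected


# Hypothetical: the same raw count of 12 citations in two different fields.
humanities_peers = [2, 3, 4, 5, 6]     # average of 4 citations
medicine_peers = [20, 25, 30, 35, 40]  # average of 30 citations

print(field_weighted_citation_impact(12, humanities_peers))  # 3.0
print(field_weighted_citation_impact(12, medicine_peers))    # 0.4
```

With identical raw citation counts, the hypothetical humanities article is cited three times as often as expected for its field, while the medicine article is cited well below expectation. This is the kind of context a single raw citation count cannot provide.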

In addition, you should consider a variety of databases and sources that provide such metrics. Databases such as Scopus, Web of Science and even Google Scholar index different journals across various subject areas, which leads to differences in citation counts and h-index. The metrics available also differ between databases. Therefore, in addition to using a variety of metrics, you may also consider searching across different databases to present a more holistic view of your research impact.

Feeling overwhelmed? Do not worry! The Research Impact Measurement team at the library has curated a list of these metrics, their definitions and their sources on our library guide. You can also contact any of the librarians listed on the library guide for further assistance.

We also have a series of upcoming workshops on how you can use Web of Science, Scopus or SciVal to measure your research impact. Do join us and register for these workshops to learn more:

- Measuring Your Research Impact Using Web of Science (29 Sept)

- Measuring Your Research Impact Using Scopus (2 Oct)

- Measuring Your Research Impact Using SciVal (7 Oct)

Check out our final post for the Digital Scholarly Communications Week.